ONNX

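ONNX (Open Neural Network Exchange) is a standard format for exporting models, typically ones created in a framework such as PyTorch, so that they can run anywhere. On Dragonwing devices, you can use ONNX Runtime with AI Engine Direct to execute ONNX models directly on the NPU for the best performance.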

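onnxruntime wheel with AI Engine Direct

onnxruntime does not currently publish prebuilt wheels for aarch64 Linux with AI Engine Direct bindings, so you cannot install it directly with pip. You can download a prebuilt wheel instead; the SqueezeNet-1.1 example on this page uses onnxruntime_qnn-1.23.0-cp312-cp312-linux_aarch64.whl (Python 3.12).

(Install via pip3 install onnxruntime_qnn-*-linux_aarch64.whl)

To build a wheel for other onnxruntime or Python versions, see edgeimpulse/onnxruntime-qnn-linux-aarch64.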

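Preparing your ONNX file

The NPU only supports quantized uint8/int8 models with fixed input shapes. If your model is not quantized, or has dynamic input shapes, it automatically falls back to the CPU. Below are some tips for preparing your model.

A full tutorial on exporting PyTorch models to ONNX is available in the PyTorch documentation.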

Dynamic shapes

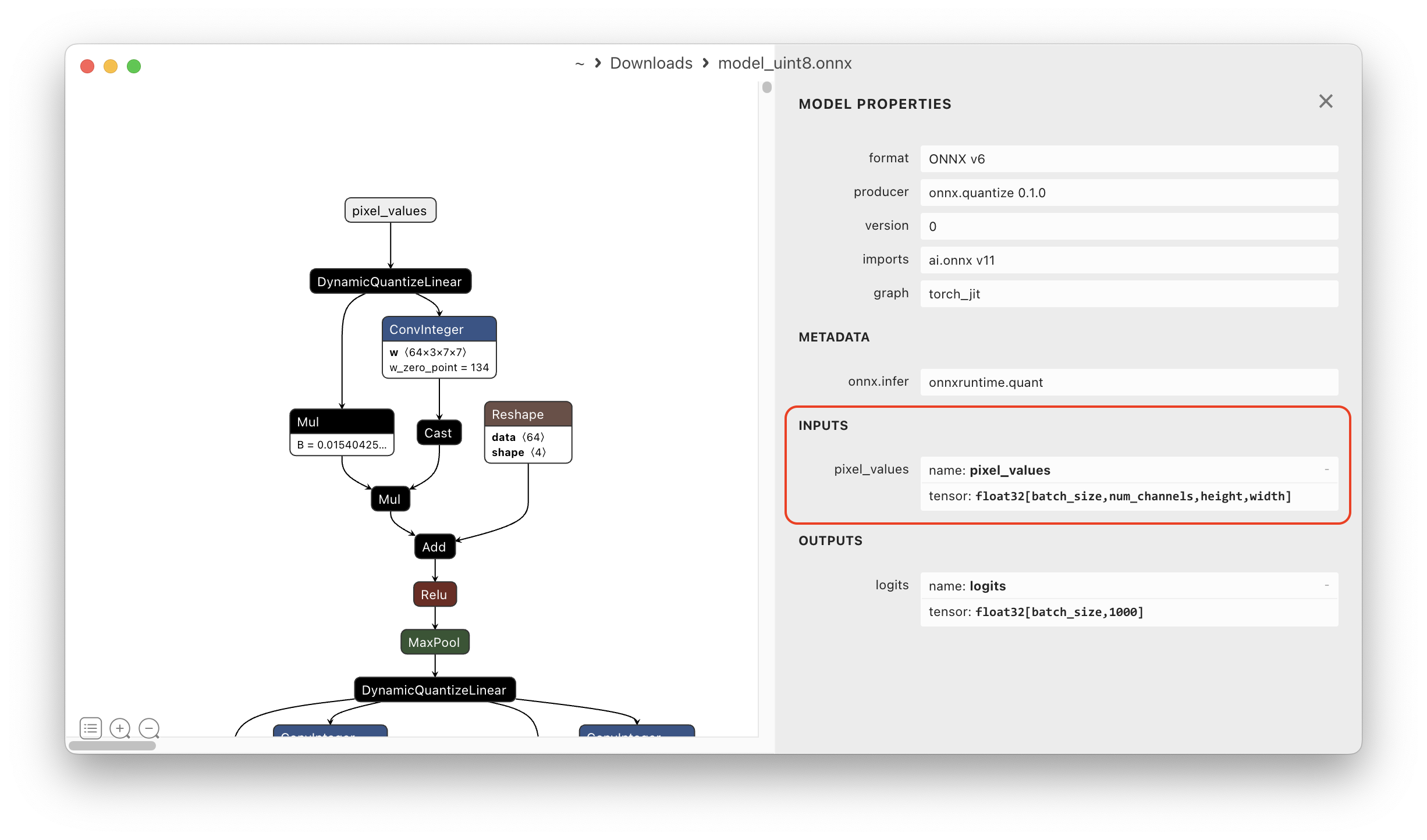

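If your model has dynamic shapes, you first need to make them fixed. You can inspect the shapes of your network with Netron.

For example, this model has dynamic shapes: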

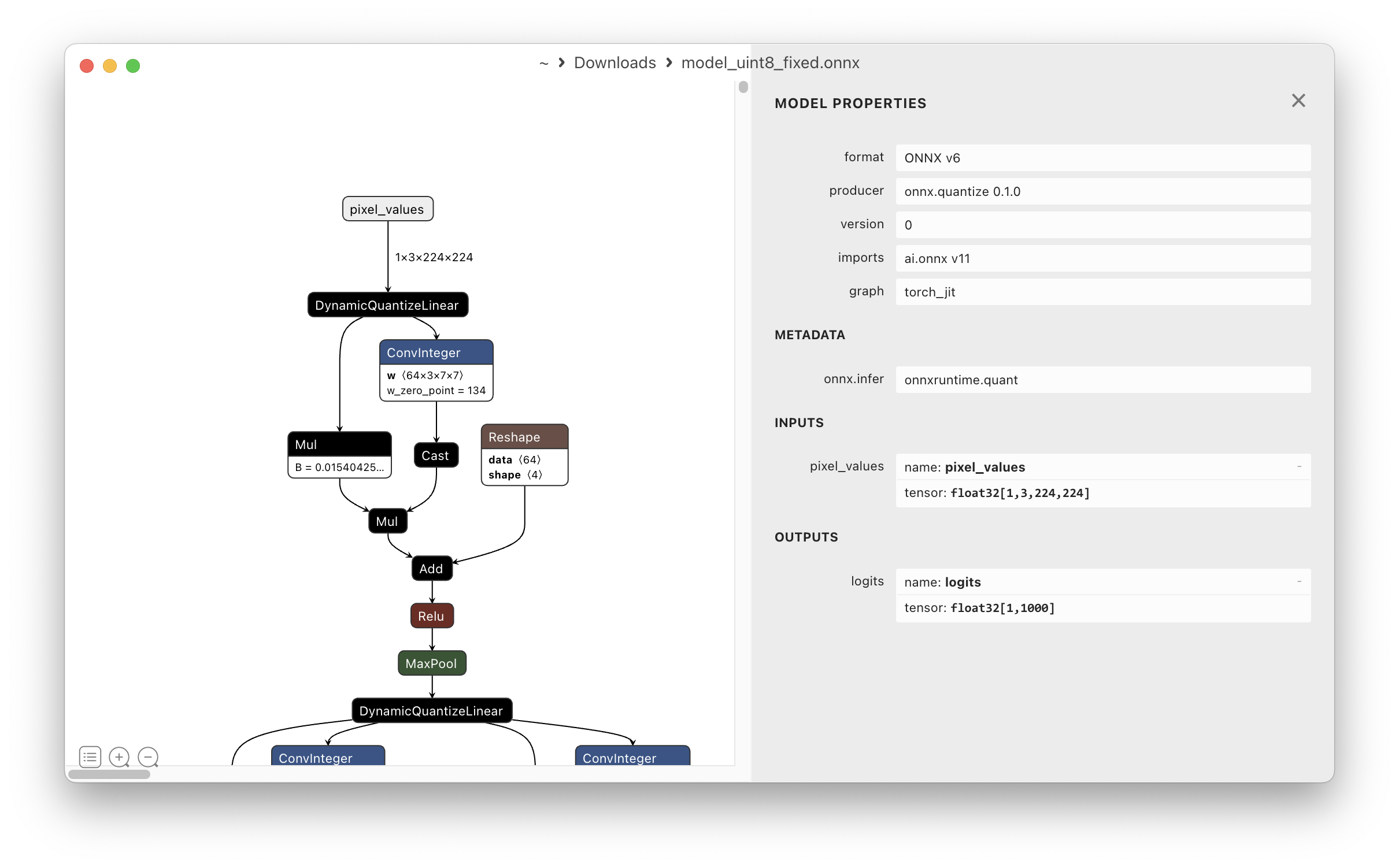

You can set fixed shapes with onnxruntime.tools.make_dynamic_shape_fixed:

python3 -m onnxruntime.tools.make_dynamic_shape_fixed \
    model_without_shapes.onnx \
    model_with_shapes.onnx \
    --input_name pixel_values \
    --input_shape 1,3,224,224

After this step, the model has fixed shapes and can run on the NPU.
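One way to confirm that no dynamic dimensions remain is to look at the input shapes that onnxruntime reports for the model. The sketch below assumes that symbolic or unknown dimensions appear as strings or None in the shape list returned by get_inputs(). find_dynamic_dims and report_dynamic_inputs are hypothetical helper names used for illustration; they are not part of onnxruntime.

```python
def find_dynamic_dims(shape):
    """Return the indices of dimensions that are not fixed positive integers.

    Assumption: dynamic dimensions show up as strings (symbolic names such as
    "batch") or None, while fixed dimensions are plain positive ints.
    """
    return [
        i for i, dim in enumerate(shape)
        if not (isinstance(dim, int) and not isinstance(dim, bool) and dim > 0)
    ]


def report_dynamic_inputs(model_path):
    """Map each model input name to the indices of its dynamic dimensions."""
    # Imported lazily so find_dynamic_dims can be used without onnxruntime
    import onnxruntime as ort

    sess = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    return {inp.name: find_dynamic_dims(inp.shape) for inp in sess.get_inputs()}
```

Calling report_dynamic_inputs("model_with_shapes.onnx") should then return an empty list for every input if the shapes were fixed successfully.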

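Quantizing models

The NPU only supports uint8/int8 quantized models. Unsupported models, or unsupported layers, are automatically moved back to the CPU. For a guide on quantizing models, see ONNX Runtime docs: Quantize ONNX Models.

Don't want to quantize models yourself? You can download a range of pre-quantized models from Qualcomm AI Hub, or use Edge Impulse to quantize new or existing models.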

Running a model on the NPU (Python)

To offload a model to the NPU, load the QNNExecutionProvider and pass it in when creating the InferenceSession. For example:

import onnxruntime as ort

MODEL_PATH = "model.onnx"  # path to your quantized ONNX model

# providers must be a list; each entry is a name or a (name, options) tuple
providers = [("QNNExecutionProvider", {
    "backend_type": "htp",
    "profiling_level": "detailed",
})]

so = ort.SessionOptions()
sess = ort.InferenceSession(MODEL_PATH, sess_options=so, providers=providers)

actual_providers = sess.get_providers()
print(f"Using providers: {actual_providers}")  # will show QNNExecutionProvider,CPUExecutionProvider if QNN can be loaded

(Make sure you use the onnxruntime wheel with AI Engine Direct bindings; see the top of this page.)
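Because unsupported models fall back to the CPU without raising an error, it can help to check the provider list explicitly rather than reading it from the log. The following is a minimal sketch; npu_is_active and require_npu are hypothetical helper names, and the check only tells you that the QNN provider was loaded, not that every layer actually runs on the NPU.

```python
def npu_is_active(session_providers):
    """True if the QNN execution provider is among the session's providers."""
    return "QNNExecutionProvider" in session_providers


def require_npu(session_providers):
    """Raise if the session ended up without the QNN execution provider."""
    if not npu_is_active(session_providers):
        raise RuntimeError(
            f"QNNExecutionProvider not loaded, got: {list(session_providers)}"
        )
    return session_providers
```

In a script you would pass in the result of sess.get_providers(), for example require_npu(sess.get_providers()).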

Example: SqueezeNet-1.1 (Python)

Open a terminal on your development board, or set up an SSH session, and then:

1️⃣ Create a new virtual environment (venv), and install onnxruntime and Pillow:

python3.12 -m venv .venv-onnxruntime-demo
source .venv-onnxruntime-demo/bin/activate

# onnxruntime with AI Engine Direct bindings (only works on Python 3.12)
wget https://cdn.edgeimpulse.com/qc-ai-docs/wheels/onnxruntime_qnn-1.23.0-cp312-cp312-linux_aarch64.whl
pip3 install onnxruntime_qnn-1.23.0-cp312-cp312-linux_aarch64.whl
rm onnxruntime*.whl

# Other dependencies
pip3 install Pillow

2️⃣ Here is an end-to-end example that runs SqueezeNet-1.1. Save this file as inference_onnx.py:

#!/usr/bin/env python3
import os, sys, time, urllib.request
import numpy as np
from PIL import Image
import onnxruntime as ort

def curr_ms():
    return round(time.time() * 1000)

# Pass --use-npu as the first argument to run on the NPU (default: CPU)
use_npu = len(sys.argv) >= 2 and sys.argv[1] == '--use-npu'

# Paths to your quantized ONNX model and test image (will be downloaded automatically)
MODEL_PATH = "model.onnx"
MODEL_DATA_PATH = "model.data"
IMAGE_PATH = "boa-constrictor.jpg"
LABELS_PATH = "SqueezeNet-1.1_labels.txt"

if not os.path.exists(MODEL_PATH):
    print("Downloading model...")
    model_url = 'https://cdn.edgeimpulse.com/qc-ai-docs/models/SqueezeNet-1.1_w8a8.onnx'
    urllib.request.urlretrieve(model_url, MODEL_PATH)

if not os.path.exists(MODEL_DATA_PATH):
    print("Downloading model data...")
    model_data_url = 'https://cdn.edgeimpulse.com/qc-ai-docs/models/SqueezeNet-1.1_w8a8.data'
    urllib.request.urlretrieve(model_data_url, MODEL_DATA_PATH)

if not os.path.exists(LABELS_PATH):
    print("Downloading labels...")
    labels_url = 'https://cdn.edgeimpulse.com/qc-ai-docs/models/SqueezeNet-1.1_labels.txt'
    urllib.request.urlretrieve(labels_url, LABELS_PATH)

if not os.path.exists(IMAGE_PATH):
    print("Downloading image...")
    image_url = 'https://cdn.edgeimpulse.com/qc-ai-docs/examples/boa-constrictor.jpg'
    urllib.request.urlretrieve(image_url, IMAGE_PATH)

with open(LABELS_PATH, 'r') as f:
    labels = [line for line in f.read().splitlines() if line.strip()]

# Select the execution provider: QNN (NPU, HTP backend) or CPU
providers = []
if use_npu:
    providers.append(("QNNExecutionProvider", {
        "backend_type": "htp",
    }))
else:
    providers.append("CPUExecutionProvider")

so = ort.SessionOptions()
sess = ort.InferenceSession(MODEL_PATH, sess_options=so, providers=providers)

actual_providers = sess.get_providers()
print(f"Using providers: {actual_providers}")  # Show which providers are actually loaded

inputs = sess.get_inputs()
outputs = sess.get_outputs()
input_name = inputs[0].name

def load_image_for_onnx(path, H, W):
    img = Image.open(path).convert("RGB").resize((W, H))
    arr = np.array(img)
    arr = arr.astype(np.float32) / 255.0
    arr = np.transpose(arr, (2, 0, 1))  # HWC -> CHW
    arr = np.expand_dims(arr, 0)        # -> NCHW
    return arr

# input data scaled 0..1
input_data_f32 = load_image_for_onnx(path=IMAGE_PATH, H=224, W=224)

# quantize the input to uint8 (cannot read these params from the onnx model I believe)
scale = 1.0 / 255.0
zero_point = 0
input_data_u8 = np.round(input_data_f32.astype(np.float32) / float(scale)) + int(zero_point)
input_data_u8 = np.clip(input_data_u8, 0, 255).astype(np.uint8)

# Warmup once
_ = sess.run(None, {input_name: input_data_u8})

# Run 10x so we can calculate avg. runtime per inference
start = curr_ms()
for i in range(10):
    out = sess.run(None, {input_name: input_data_u8})
end = curr_ms()

# Image classification models in AI Hub miss a Softmax() layer at the end of the model, so add it manually
def softmax(x, axis=-1):
    # subtract max for numerical stability
    x_max = np.max(x, axis=axis, keepdims=True)
    e_x = np.exp(x - x_max)
    return e_x / np.sum(e_x, axis=axis, keepdims=True)

scores = softmax(np.squeeze(out[0], axis=0))

# Take top 5
top_k_idx = scores.argsort()[-5:][::-1]
print("\nTop-5 predictions:")
for i in top_k_idx:
    label = labels[i] if i < len(labels) else f"Class {i}"
    print(f"{label}: score={scores[i]}")

print("")
print(f"Inference took (on average): {(end - start) / 10:.2f} ms per image")

Note: this script has hard-coded quantization parameters. If you swap out the model, you might need to change them.
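To make the role of those parameters concrete, the sketch below isolates the affine uint8 quantization that the script applies to its input, together with the inverse mapping. The function names quantize_uint8 and dequantize_uint8 are hypothetical; the values scale = 1/255 and zero_point = 0 are the ones hard-coded in the script, and a different model may expect different values.

```python
import numpy as np


def quantize_uint8(x, scale, zero_point):
    """Map float values to uint8: q = clip(round(x / scale) + zero_point, 0, 255)."""
    q = np.round(np.asarray(x, dtype=np.float32) / float(scale)) + int(zero_point)
    return np.clip(q, 0, 255).astype(np.uint8)


def dequantize_uint8(q, scale, zero_point):
    """Approximate inverse: x ~= (q - zero_point) * scale."""
    return (np.asarray(q).astype(np.float32) - int(zero_point)) * float(scale)


# Parameters taken from the script above (assumed to match the model's input)
scale = 1.0 / 255.0
zero_point = 0

pixels = np.array([0.0, 0.2, 1.0], dtype=np.float32)  # inputs scaled to 0..1
quantized = quantize_uint8(pixels, scale, zero_point)
restored = dequantize_uint8(quantized, scale, zero_point)
```

Values outside the representable range are clipped, so an input scaled with the wrong parameters tends to saturate at 0 or 255 rather than fail loudly.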

3️⃣ Run the model on the CPU:

python3 inference_onnx.py

# Top-5 predictions:
# common iguana: score=0.3682704567909241
# night snake: score=0.1186317503452301
# water snake: score=0.1186317503452301
# boa constrictor: score=0.0813227966427803
# bullfrog: score=0.0813227966427803
#
# Inference took (on average): 6.50 ms per image

4️⃣ Run the model on the NPU:

python3 inference_onnx.py --use-npu

# Top-5 predictions:
# common iguana: score=0.30427297949790955
# water snake: score=0.11838366836309433
# night snake: score=0.11838366836309433
# boa constrictor: score=0.11838366836309433
# rock python: score=0.08115273714065552
#
# Inference took (on average): 1.60 ms per image

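As you can see, the model runs significantly faster on the NPU (1.60 ms vs. 6.50 ms per image in the runs above), but its output changes slightly.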
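If you want to put a number on how much the output changes, one option is to compare the score vectors from the two runs. This is a rough sketch rather than part of the example script: compare_scores and top_k are hypothetical helpers, and they assume you have kept the softmax scores of both runs as arrays of the same shape (the script above only prints them).

```python
import numpy as np


def top_k(scores, k=5):
    """Indices of the k highest scores, best first."""
    return np.argsort(scores)[::-1][:k].tolist()


def compare_scores(cpu_scores, npu_scores, k=5):
    """Summarize the difference between two score vectors of equal shape."""
    cpu = np.asarray(cpu_scores, dtype=np.float64)
    npu = np.asarray(npu_scores, dtype=np.float64)
    if cpu.shape != npu.shape:
        raise ValueError(f"shape mismatch: {cpu.shape} vs {npu.shape}")
    cpu_top, npu_top = top_k(cpu, k), top_k(npu, k)
    return {
        "same_top1": cpu_top[0] == npu_top[0],
        "top_k_overlap": len(set(cpu_top) & set(npu_top)),
        "max_abs_diff": float(np.max(np.abs(cpu - npu))),
    }
```

For the runs shown above, the top-1 class (common iguana) is the same on both backends and four of the five top classes overlap.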

Tips and tricks

Disabling CPU fallback

For debugging, you may want to disable CPU fallback. You can do so via:

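import onnxruntime as ort

so = ort.SessionOptions()
# "1" disables fallback to the CPU execution provider; pass `so` as sess_options to InferenceSession
so.add_session_config_entry("session.disable_cpu_ep_fallback", "1")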